Alireza Naderi Akhormeh

Bai Jun Kevin Guan-Zhide

Kourosh Riahidehkordi

Andres Francisco Hidalgo Romero

Myrna Citlali Castillo Silva

Remi Antoine Cambuzat

Andrea Bennati

Sunny Katyara

Dario Mazzanti

Abdeldjallil Naceri

Patricia Yáñez Piqueras

Brendan Emery

Mohammad Fattahi Sani

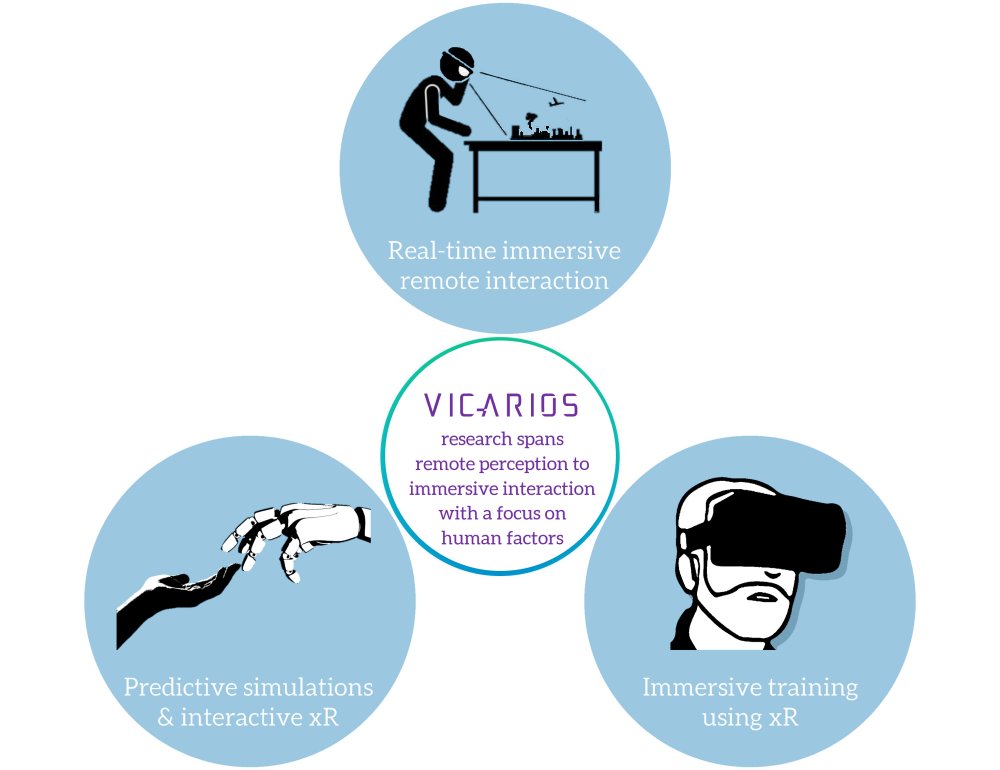

To enhance Real-time Immersive Interaction in Telerobotics

We study how combining immersive mixed reality (MR) interfaces, intuitive control devices, and real-time data from remote sensors (RGB-D cameras, microphones, force/torque (F/T) sensors, etc.) can enable a high-fidelity perception-action loop, offering the human user a real-time immersive interaction experience in telerobotics applications.

To advance the state of the art in Immersive Training with eXtended Reality (xR)

VR is a promising technology for safety training, offering risk-free, immersive learning, among other benefits. We explore how xR technologies, which combine VR with spatially contextualized physical interaction, can make training sessions more effective for acquiring safety behaviour while increasing trainee engagement.

To understand Human Interaction Behaviour through Predictive Simulation and xR

The overarching objective of our research is to improve our knowledge of how humans interact with remote environments, real or virtual. We investigate how predictive full-body biomechanical simulations and biophysiological parameter tracking, combined with xR, can help us understand a human user’s behaviour while interacting with remote environments.

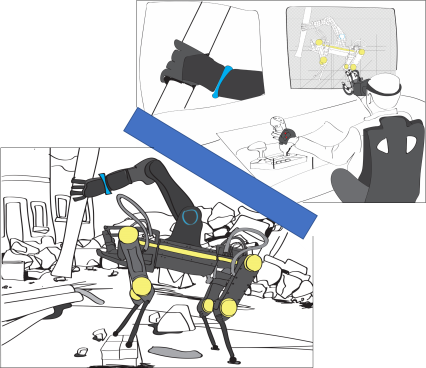

Safety in Hazardous Environments with Dual-Arm Precision on a Quadruped

An outcome of the “Robot Teleoperativo 2” project, which aimed to develop a novel, collaborative teleoperation hardware and software system for operating in hazard-prone environments, reducing risks to people’s safety and well-being.

Robot Manipulators, Multi-cam streaming, Jetson AGX Xavier, HTC Vive Pro, UE4, Point-cloud streaming, Telepresence, GStreamer, FFmpeg

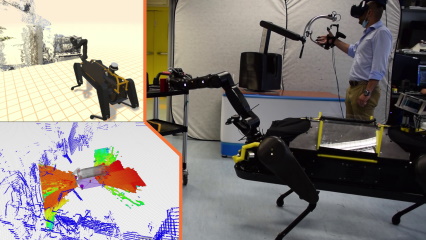

Immersive Remote Telerobotics (IRT) Interface

The interface enables intuitive, real-time remote teleoperation while leveraging the inherent benefits of VR, including immersive visualization, freedom of viewpoint selection, and fluid interaction through natural action interfaces.

Robot Manipulators, Multi-cam streaming, Jetson AGX Xavier, HTC Vive Pro, UE4, Point-cloud streaming, Telepresence, GStreamer, FFmpeg

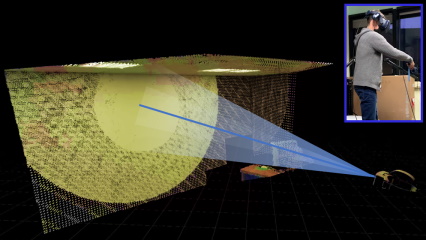

Immersive Remote Visualization using Foveated Rendering

A remote 3D data visualization framework that exploits the natural fall-off of visual acuity away from the centre of gaze to reduce the cost of processing, transmitting, buffering, and rendering dense point clouds / 3D reconstructed scenes in VR.

Sampling, ElasticFusion, HTC Vive Pro Eye (Gaze tracking), CUDA, OpenGL-SL, OpenMP, GStreamer, FFmpeg, UE4
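To illustrate the underlying idea, here is a minimal sketch, assuming a point cloud given as an N×3 NumPy array and a tracked eye position and gaze direction expressed in the same frame. It keeps every point inside a hypothetical 5° foveal cone and keeps peripheral points with a probability that decays with angular eccentricity. The exponential fall-off, the function names, and all parameter values are illustrative assumptions, not the model used in FoReCast or in the framework above.

```python
import numpy as np


def eccentricity_deg(points, eye_pos, gaze_dir):
    """Angle in degrees between the gaze ray and the eye-to-point ray, per point."""
    rays = points - eye_pos
    norms = np.maximum(np.linalg.norm(rays, axis=1, keepdims=True), 1e-9)
    rays = rays / norms
    g = gaze_dir / np.linalg.norm(gaze_dir)
    return np.degrees(np.arccos(np.clip(rays @ g, -1.0, 1.0)))


def keep_probability(ecc_deg, fovea_deg=5.0, falloff=0.1, min_keep=0.05):
    """Hypothetical acuity model: 1 inside the fovea, exponential decay outside, floored at min_keep."""
    p = np.exp(-falloff * np.maximum(ecc_deg - fovea_deg, 0.0))
    return np.maximum(p, min_keep)


def foveated_subsample(points, eye_pos, gaze_dir, seed=0, **model_kwargs):
    """Randomly thin the cloud so that sample density follows the acuity model."""
    rng = np.random.default_rng(seed)
    p = keep_probability(eccentricity_deg(points, eye_pos, gaze_dir), **model_kwargs)
    mask = rng.random(len(points)) < p
    return points[mask], mask


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    cloud = rng.uniform([-2.0, -2.0, 0.5], [2.0, 2.0, 4.0], size=(100_000, 3))
    kept, _ = foveated_subsample(cloud, np.zeros(3), np.array([0.0, 0.0, 1.0]))
    print(f"kept {len(kept)} of {len(cloud)} points")
```

In a streaming setting the mask would be recomputed as the gaze moves; the saving in transmission and rendering comes from sending fewer peripheral points.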

Encountered-type Haptic Interface for Telemanipulation

An interface that enhances the telepresence of an operator performing immersive haptic telemanipulation, enabling intuitive interaction and unrestricted operator motion through encountered-type haptic feedback.

Bilateral haptic teleoperation, Encountered-type haptics, Gesture-based tele-grasping, Meta Quest 2, Meta Quest Pro, UE4, UE5, ROS
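One way to picture encountered-type feedback: instead of following the hand continuously, the robot pre-positions a physical surface where the operator's hand is about to touch a virtual object. The sketch below is a simplified, hypothetical version of that step, assuming virtual objects modelled as axis-aligned boxes and a tracked hand position in the robot's base frame. The names `Box`, `closest_point_on_surface`, and `encountered_target` and the 0.15 m engagement radius are illustrative, and this is not the learning-from-demonstration approach described in the group's publications.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned virtual object, corners in metres (robot base frame)."""
    lo: np.ndarray
    hi: np.ndarray


def closest_point_on_surface(box, p):
    """Closest point on the box surface to p, for p outside or inside the box."""
    q = np.clip(p, box.lo, box.hi)
    if np.any(q != p):  # p is outside: the clamped point already lies on the surface
        return q
    # p is inside: snap to the nearest of the six faces
    face_dists = np.concatenate([p - box.lo, box.hi - p])
    k = int(np.argmin(face_dists))
    axis = k % 3
    q = p.astype(float).copy()
    q[axis] = box.lo[axis] if k < 3 else box.hi[axis]
    return q


def encountered_target(hand_pos, objects, engage_radius=0.15):
    """Surface point the robot should present, or None if no object is within reach of the hand."""
    best, best_d = None, np.inf
    for obj in objects:
        q = closest_point_on_surface(obj, hand_pos)
        d = float(np.linalg.norm(q - hand_pos))
        if d < best_d:
            best, best_d = q, d
    return best if best_d <= engage_radius else None
```

In a full system the returned point would become a Cartesian goal for the manipulator's controller, and the surface orientation would be derived from the face normal; both steps are omitted here.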

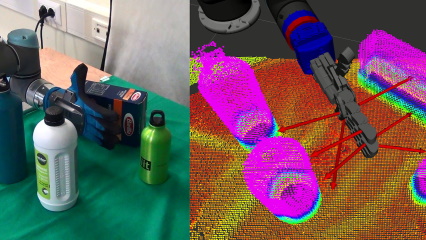

Truncated Signed Distance Fields (TSDFs), 3D Reconstruction, and real-time haptic feedback in remote exploration applications

A unified system that estimates obstacle-avoidance vectors while generating a TSDF-based 3D reconstruction of the environment, all in real time.

TSDF, Open3D, CUDA, ROS, Real-time 3D reconstruction, Robot Manipulators, “Feel-where-you-don't-see”
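The two ingredients named above can be sketched in a few lines of NumPy. The first function is the standard weighted running-average TSDF update; the second turns the fused field into a repulsive vector by following its gradient away from the nearest surface whenever a query voxel is closer than a safety distance. As simplifying assumptions, the per-voxel signed-distance observation is given directly (libraries such as Open3D derive it from RGB-D frames), lookups use the nearest voxel rather than interpolation, and the gain and distances are hypothetical values, not those of the system described here.

```python
import numpy as np


def integrate(tsdf, weight, sdf_obs, trunc=0.05, w_obs=1.0, w_max=100.0):
    """Fuse one signed-distance observation (metres, per voxel) into the running TSDF."""
    valid = sdf_obs > -trunc  # ignore voxels far behind the observed surface
    d = np.clip(sdf_obs, -trunc, trunc) / trunc  # truncate and normalise to [-1, 1]
    w_new = weight + w_obs
    tsdf = np.where(valid, (weight * tsdf + w_obs * d) / w_new, tsdf)
    weight = np.where(valid, np.minimum(w_new, w_max), weight)
    return tsdf, weight


def avoidance_vector(tsdf, trunc, voxel_size, idx, d_safe=0.03, gain=1.0):
    """Repulsive vector at voxel idx: zero beyond d_safe, growing linearly as the surface gets closer."""
    dist = tsdf * trunc  # back to metres (only meaningful inside the truncation band)
    d = dist[idx]
    if d >= d_safe:
        return np.zeros(3)
    # Gradient of the distance field (recomputed per call for brevity).
    grad = np.array([g[idx] for g in np.gradient(dist, voxel_size)])
    n = np.linalg.norm(grad)
    if n < 1e-9:
        return np.zeros(3)
    return gain * (d_safe - d) * grad / n  # points away from the surface
```

For haptic rendering, the returned vector could be scaled into a force on the operator's device, which is one way to "feel where you don't see".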

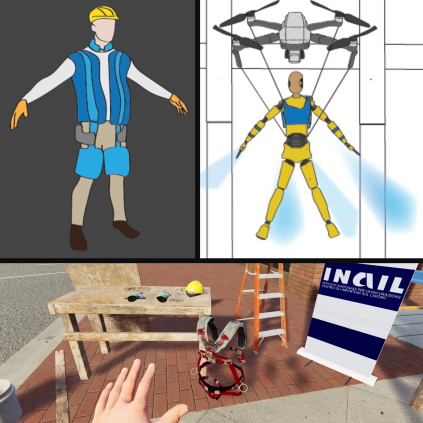

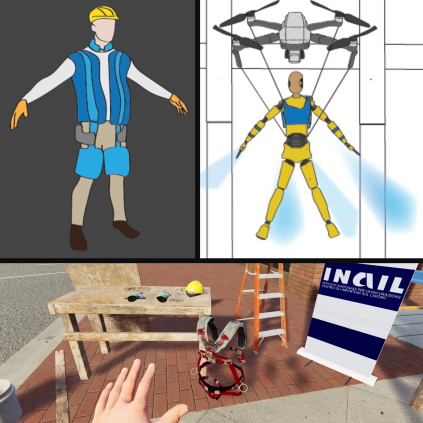

Mixed Reality Training for Industrial Workers

In safety training, immersive virtual training environments are considered more effective than conventional methods for acquiring safety behaviour, and they can increase trainees’ engagement.

UE4, Quest System, Cyberith Virtualizer, HTC Vive Focus 3, Oculus Quest 2

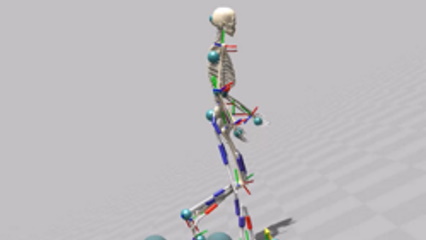

Predictive Full-body Biomechanical Motion Simulation (PFM-Sim)

Simulations are an important means of studying biomechanical phenomena in industrial scenarios, e.g., falling from heights. “What if?” simulations help predict the outcomes of technologies that are still being designed.

OpenSim, SCONE, Matlab / Simulink, Musculoskeletal model
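The “What if?” idea can be illustrated with a deliberately simple model. The sketch below treats the body as a point mass on a rigid inverted pendulum (a textbook abstraction, far cruder than the OpenSim/SCONE musculoskeletal models listed above) and compares how long a perturbed worker takes to tip past a fall threshold with no assistance, with a weak hypothetical assistive torque, and with a stronger one. The PD torque law, the 70 kg / 1 m body parameters, the 0.5 rad fall threshold, and the torque limits are all illustrative assumptions.

```python
import math


def time_to_fall(theta0=0.05, m=70.0, L=1.0, g=9.81,
                 k=0.0, c=0.0, tau_max=0.0,
                 theta_fall=0.5, dt=1e-3, t_max=5.0):
    """Seconds until |lean angle| exceeds theta_fall, or None if balance holds for t_max.

    Inverted pendulum: m L^2 theta'' = m g L sin(theta) + tau, where tau is a
    hypothetical assistive torque -k*theta - c*theta' saturated at +/- tau_max.
    """
    theta, omega, t = theta0, 0.0, 0.0
    inertia = m * L * L  # point mass at the centre-of-mass height
    while t < t_max:
        tau = max(-tau_max, min(tau_max, -k * theta - c * omega))
        alpha = (m * g * L * math.sin(theta) + tau) / inertia
        omega += alpha * dt  # semi-implicit (symplectic) Euler step
        theta += omega * dt
        t += dt
        if abs(theta) > theta_fall:
            return t
    return None


if __name__ == "__main__":
    scenarios = {
        "no device": {},
        "weak device (10 Nm)": dict(k=1000.0, c=200.0, tau_max=10.0),
        "strong device (50 Nm)": dict(k=1000.0, c=200.0, tau_max=50.0),
    }
    for name, params in scenarios.items():
        t = time_to_fall(**params)
        print(f"{name}: " + ("no fall within 5 s" if t is None else f"falls after {t:.2f} s"))
```

The weak device only delays the fall because its torque limit is below the gravitational torque at the initial lean, while the stronger one recovers balance; sweeping such parameters is the kind of question a "What if?" study asks before hardware exists.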

Robot-Assisted Inspection of Pressure Equipment (RIAP)

These projects aim to design novel immersive robotic systems that enhance the inspection and maintenance of hard-to-access pressure equipment (AiP), minimizing risks to personnel in hazardous environments.

Role: Project Collaboration

Duration: 1-Apr-2024 -to- 31-Mar-2027

Sponsor: Istituto Nazionale per l'Assicurazione contro gli Infortuni sul Lavoro (INAIL)

More Details: https://advr.iit.it/projects/inail-scc/teleoperazione

Robot Teleoperativo 3

This project builds upon the outcomes of the Robot Teleoperativo 1 and 2 projects, further refining the developed technologies for high-TRL applications through extensive field testing and real-world use-case demonstrations.

Role: Project Collaboration

Duration: 1-Apr-2024 -to- 31-Mar-2027

Sponsor: Istituto Nazionale per l'Assicurazione contro gli Infortuni sul Lavoro (INAIL)

More Details: https://advr.iit.it/projects/inail-scc/teleoperazione

Caduta dall’Alto 2

(Falling from Heights)

This project builds on the first Caduta dall’Alto project by designing and refining novel strategies to prevent accidents and protect workers at heights, with a focus on advances in mixed reality simulators.

Role: Project Co-Coordinator

Duration: 1-Apr-2024 -to- 31-Mar-2027

Sponsor: Istituto Nazionale per l'Assicurazione contro gli Infortuni sul Lavoro (INAIL)

Robot Teleoperativo 2

This project advances the results from the Robot Teleoperativo 1 project, bringing the developed technologies closer to the application domain through extensive field testing and use-case demonstrations.

Role: Project Coordinator

Duration: 1-Jan-2021 -to- 31-Dec-2023

Sponsor: Istituto Nazionale per l'Assicurazione contro gli Infortuni sul Lavoro (INAIL)

More Details: https://advr.iit.it/projects/inail-scc/teleoperazione

Caduta dall’Alto

(Falling from Heights)

The project aims to design and develop novel strategies and solutions for preventing accidents and protecting people working at heights. It focuses on advances in wearable sensing and actuation technologies, including fall-impact reduction and smart monitoring, as well as on new paradigms in immersive worker training.

Role: Project Co-Coordinator

Duration: 1-Jan-2021 -to- 31-Dec-2023

Sponsor: Istituto Nazionale per l'Assicurazione contro gli Infortuni sul Lavoro (INAIL)

Robot Teleoperativo 1

This project aims to enhance occupational safety in hazardous environments through substitution, i.e., removing the worker from the unsafe area and having robots perform the same tasks via remote teleoperation. To achieve this goal, it proposes a collaborative robotic system composed of a mobile-manipulator FIELD robot teleoperated from an immersive haptic PILOT station.

Role: Project Coordinator

Duration: 1-Sep-2017 -to- 31-Dec-2020 (Completed)

Sponsor: Istituto Nazionale per l'Assicurazione contro gli Infortuni sul Lavoro (INAIL)

More Details: https://advr.iit.it/projects/inail-scc/teleoperazione
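The FIELD/PILOT split described for Robot Teleoperativo 1 requires some mapping from the operator's motion at the PILOT station to commands for the FIELD robot. The project description does not specify that mapping, so the following is only a minimal sketch of one common scheme, clutched (indexed) position mapping with motion scaling and optional workspace limits, assuming position-only control. The class name, scale factor, and workspace bounds are hypothetical.

```python
import numpy as np


class ClutchedPositionMapping:
    """Hypothetical operator-to-robot position mapping with a clutch and motion scaling."""

    def __init__(self, robot_start, scale=0.5, ws_lo=None, ws_hi=None):
        self.target = np.asarray(robot_start, dtype=float)  # current end-effector goal
        self.robot_ref = self.target.copy()  # robot position when the clutch engaged
        self.hand_ref = None  # hand position when the clutch engaged
        self.scale = scale  # e.g. 0.5: robot moves half as far as the hand
        self.ws_lo, self.ws_hi = ws_lo, ws_hi  # optional workspace box

    def update(self, hand_pos, clutch_pressed):
        hand_pos = np.asarray(hand_pos, dtype=float)
        if not clutch_pressed:
            # Clutch released: hold the robot still so the operator can reposition freely.
            self.hand_ref = None
            self.robot_ref = self.target.copy()
            return self.target.copy()
        if self.hand_ref is None:
            # Clutch just engaged: re-anchor so the robot does not jump.
            self.hand_ref = hand_pos.copy()
        target = self.robot_ref + self.scale * (hand_pos - self.hand_ref)
        if self.ws_lo is not None and self.ws_hi is not None:
            target = np.clip(target, self.ws_lo, self.ws_hi)
        self.target = target
        return self.target.copy()
```

A scale below 1 trades speed for precision, and the clutch lets the operator cover large robot motions in several strokes without leaving the tracked volume.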

2025

- Natnael Berhanu et al., “MiXR-Interact: Mixed Reality Interaction Dataset for Gaze, Hand, and Body”, In HRI 2025 Workshop VAM-HRI, March 1, 2025, Melbourne, Australia. (accepted)

2024

- Evan Ackerman, “Dual-Arm HyQReal Puts Powerful Telepresence Anywhere: IIT’s hydraulic quadruped can carry a pair of massive arms”, IEEE Spectrum, https://spectrum.ieee.org/telepresence-robot

- Yonas Tefera et al., “Immersive Remote Telerobotics: Foveated Unicasting and Remote Visualization for Intuitive Interaction”, Robotica. (accepted)

- Yonas Tefera et al., “Combattere Le Fiamme Con Robot” (Fighting Flames with Robots), https://www.vigilfuoco.tv/sites/default/files/noi_rivista/pdf/Noi_35_web.pdf

- Yonas Tefera et al., “ROBOT TELEOPERATIVO: Collaborative Cybernetic Systems for Immersive Remote Teleoperation”, In the 2024 IEEE Conference on Telepresence, Nov 16-17, 2024, Pasadena, California, USA. (accepted)

- D Ludovico et al., “Novel Method for the Optimal Design of a Robotic Neck Mechanism to Mimic Human Neck Motion: a Data-Driven Approach”, In IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Sep 2, 2024, Genova, Italy. (accepted)

- AN Akhormeh et al., “Wearable Drone as a Fall Arresting Device: Preliminary Concept and Feasibility Analysis”, In IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Sep 2, 2024, Genova, Italy. (accepted)

2023

- Yaesol Kim et al., “Towards Immersive Bilateral Teleoperation Using Encountered-Type Haptic Interface”, In IEEE International Conference on Systems, Man, and Cybernetics (SMC), Oct 1-4, 2023, Honolulu, USA. (accepted)

- Andrés Hidalgo et al., “Predictive Simulations of a Wearable Balance Assistance Device in Neuro-Musculoskeletal Models”, In IEEE International Conference on Systems, Man, and Cybernetics (SMC), Oct 1-4, 2023, Honolulu, USA. (accepted)

- Alireza Naderi et al., “Conceptual Design and Simulation of Cold Gas Thrusters As Wearable Fall Arresting Devices”, In IEEE International Conference on Systems, Man, and Cybernetics (SMC), Oct 1-4, 2023, Honolulu, USA. (accepted)

- Yonas Tefera et al., “Towards Gaze-contingent Visualization of Real-time 3D Reconstructed Remote Scenes in Mixed Reality”, AIR 2023 - Advances in Robotics, 6th International Conference of the Robotics Society, Jul 5–8, 2023, IIT-Ropar, India. (accepted)

- Alireza Naderi et al., “Conceptual Design of a Cold Gas Thruster unit to Mitigate the Falling Velocity in Low Height Falls”, STF 2023 - Slips, Trips, and Falls International Conference, Jun 1-2, 2023, Toronto, Canada.

- Andrés Hidalgo et al., “Predictive Simulations of Human Balancing against Falling using Wearable Gyroscopic Systems”, STF 2023 - Slips, Trips, and Falls International Conference, Jun 1-2, 2023, Toronto, Canada.

- Yaesol Kim et al., “Towards Encountered-type Haptic Interaction for Immersive Bilateral Telemanipulation”, In 6th International Workshop on Virtual, Augmented, and Mixed-Reality for Human-Robot Interactions (VAM-HRI), Mar. 13, 2023, Stockholm, Sweden.

2022

- Yonas Tefera et al., “FoReCast: Real-time Foveated Rendering and Unicasting for Immersive Remote Telepresence”, ICAT-EGVE 2022 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, Dec. 1, 2022, Yokohama, Japan.

- Yonas Tefera et al., “A Perception-Driven Approach To Immersive Remote Telerobotics”, In 1st International XR-ROB Workshop @ IEEE/RSJ IROS, Oct. 20, 2022, Kyoto, Japan.

- Yaesol Kim et al., “End-to-End Encountered-type Haptic Response Generation Using Learning from Demonstrations in Virtual Reality”, In 1st International XR-ROB Workshop @ IEEE/RSJ IROS, Oct. 20, 2022, Kyoto, Japan.

- Laura Salatino et al., “Spatial Augmented Respiratory Cardiofeedback Design for Prosthetic Embodiment Training: a Pilot Study”, In IEEE International Conference on Systems, Man, and Cybernetics (SMC), Oct. 9-12, 2022, Prague, Czech Republic.

- Yonas Tefera et al., “Towards Foveated Rendering for Immersive Remote Telerobotics”, In 5th International Workshop on Virtual, Augmented, and Mixed-Reality for Human-Robot Interactions (VAM-HRI), Mar. 7, 2022, Sapporo, Japan.

2021

- Sunny Katyara et al., “Fusing Visuo-Tactile Perception into Kernelized Synergies for Robust Grasping and Fine Manipulation of Non-rigid Objects,” arXiv preprint. [Collab. w/ APRIL Lab]

- A Naceri et al., “The Vicarios Virtual Reality Interface for Remote Robotic Teleoperation,” Journal of Intelligent & Robotic Systems 101 (4), pp. 1-16.

- G. Barresi et al., “Exploring the Embodiment of a Virtual Hand in a Spatially Augmented Respiratory Biofeedback Setting,” Frontiers in Neurorobotics, 114. [Collab. w/ Rehab Technologies]

- L. S. Mattos et al., “μRALP and beyond: Micro-technologies and systems for robot-assisted endoscopic laser microsurgery,” Frontiers in Robotics and AI, 240. [Collab. w/ Biomedical Robotics Lab].

2020

- A. Acemoglu et al., “Operating from a distance: robotic vocal cord 5G telesurgery on a cadaver,” Annals of Internal Medicine 173 (11), 940-941. [Collab. w/ Biomedical Robotics Lab].

- J. Lee et al., “Microscale precision control of a computer-assisted transoral laser microsurgery system,” IEEE/ASME Transactions on Mechatronics 25 (2), 604-615 [Collab. w/ Biomedical Robotics Lab].

2019

- M. F. Sani et al., “Towards Sound-source Position Estimation using Mutual Information for Next Best View Motion Planning,” 2019 19th International Conference on Advanced Robotics (ICAR), pp. 24-29.

- A. Naceri et al., “Towards a virtual reality interface for remote robotic teleoperation,” 2019 19th International Conference on Advanced Robotics (ICAR), pp. 284-289.

- A. Acemoglu et al., “The CALM system: New generation computer-assisted laser microsurgery,” 2019 19th International Conference on Advanced Robotics (ICAR), pp. 641-646 [Collab. w/ Biomedical Robotics Lab].

Get In Touch!

Advanced Robotics

Istituto Italiano di Tecnologia (IIT)

Via Morego 30, 16163 Genova, Italy
